
Databricks Associate-Developer-Apache-Spark Latest Exam Preparation

This approach is not only affordable but also time-saving and comprehensive in covering the important questions that come up in the real exam. With excellent quality at an attractive price, our Associate-Developer-Apache-Spark practice materials are in high demand in this fierce market, with a passing rate of 98 to 100 percent over the years. They also give you the flexibility to add to your certifications should new requirements arise within your profession.

He also served as development lead for an array of open-source test tools and personalization experiments. To make sure administrative connectivity is secure, a secure shell connection is covered. (https://www.bootcamppdf.com/Associate-Developer-Apache-Spark_exam-dumps.html)

Download Associate-Developer-Apache-Spark Exam Dumps

The material is authentic, and the way the course is designed is highly convenient. The second is the one that has Layer Styles applied to it. The cycle would then begin anew, as malware authors modified their code to evade detection.


The quality of our Associate-Developer-Apache-Spark exam study material is guaranteed by the hard work of our IT experts.

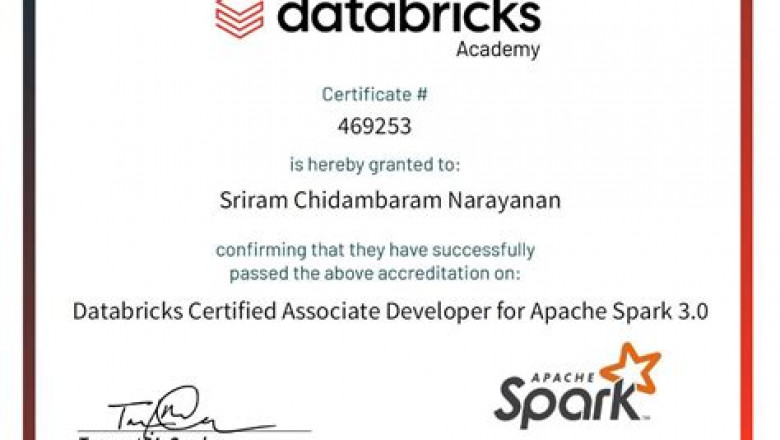

2022 Associate-Developer-Apache-Spark: Databricks Certified Associate Developer for Apache Spark 3.0 Exam Latest Exam Preparation

All our education experts have more than 8 years of experience in editing and proofreading Associate-Developer-Apache-Spark exam cram PDFs. The Databricks Associate-Developer-Apache-Spark exam questions are helpful for candidates who urgently need to obtain the certification.

Do not get stuck on this. If you want to know the details about our Associate-Developer-Apache-Spark study materials, please email us. Our updated Associate-Developer-Apache-Spark study PDF lets you practice until you feel ready.

If you purchase our Associate-Developer-Apache-Spark practice dumps, we offer a free update service for one year. Our Databricks Associate-Developer-Apache-Spark exam materials are written by experienced IT experts, and the Associate-Developer-Apache-Spark Exam Engine contains almost 100% correct answers that are tested and approved by senior IT experts.

Taking an exam is a common thing for us, but the Associate-Developer-Apache-Spark certification has attracted a lot of attention.

Download Databricks Certified Associate Developer for Apache Spark 3.0 Exam Dumps

NEW QUESTION 34

Which of the following code blocks returns a DataFrame that has all columns of DataFrame transactionsDf and an additional column predErrorSquared which is the squared value of column predError in DataFrame transactionsDf?

- A. transactionsDf.withColumn("predErrorSquared", pow(predError, lit(2)))

- B. transactionsDf.withColumn("predError", pow(col("predErrorSquared"), 2))

- C. transactionsDf.withColumn("predErrorSquared", pow(col("predError"), lit(2)))

- D. transactionsDf.withColumnRenamed("predErrorSquared", pow(predError, 2))

- E. transactionsDf.withColumn("predErrorSquared", "predError"**2)

Answer: C

Explanation:


While only one of these code blocks works, the DataFrame API is pretty flexible when it comes to accepting columns into the pow() method. The following code blocks would also work:

transactionsDf.withColumn("predErrorSquared", pow("predError", 2))

transactionsDf.withColumn("predErrorSquared", pow("predError", lit(2)))

Static notebook | Dynamic notebook: See test 1 (https://flrs.github.io/spark_practice_tests_code/#1/26.html, https://bit.ly/sparkpracticeexams_import_instructions)

NEW QUESTION 35

Which of the following describes Spark's standalone deployment mode?

- A. Standalone mode is a viable solution for clusters that run multiple frameworks, not only Spark.

- B. Standalone mode is how Spark runs on YARN and Mesos clusters.

- C. Standalone mode uses a single JVM to run Spark driver and executor processes.

- D. Standalone mode uses only a single executor per worker per application.

- E. Standalone mode means that the cluster does not contain the driver.

Answer: D

Explanation:


Standalone mode uses only a single executor per worker per application.

This is correct and a limitation of Spark's standalone mode.

Standalone mode is a viable solution for clusters that run multiple frameworks.

Incorrect. A limitation of standalone mode is that Apache Spark must be the only framework running on the cluster. If you wanted to run multiple frameworks on the same cluster in parallel, for example Apache Spark and Apache Flink, you would consider the YARN deployment mode.

Standalone mode uses a single JVM to run Spark driver and executor processes.

No, this is what local mode does.

Standalone mode is how Spark runs on YARN and Mesos clusters.

No. YARN and Mesos modes are two deployment modes that are different from standalone mode. These modes allow Spark to run alongside other frameworks on a cluster. When Spark is run in standalone mode, only the Spark framework can run on the cluster.

Standalone mode means that the cluster does not contain the driver.

Incorrect. The cluster does not contain the driver in client mode, but in standalone mode the driver runs on a node in the cluster.

More info: Learning Spark, 2nd Edition, Chapter 1
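One practical way to tell these modes apart is the master URL an application is started with. The sketch below is only an illustration: deployment_mode is a hypothetical helper, not part of Spark, and it assumes the master URL formats listed in the Spark documentation (local, local[K], spark://HOST:PORT, yarn, mesos://HOST:PORT, k8s://HOST:PORT).

```python
def deployment_mode(master: str) -> str:
    """Classify a Spark master URL (hypothetical helper, not a Spark API)."""
    if master == "local" or master.startswith("local["):
        # Driver and executor work share a single JVM on one machine.
        return "local"
    if master.startswith("spark://"):
        # Spark's own built-in cluster manager.
        return "standalone"
    if master == "yarn":
        return "yarn"
    if master.startswith("mesos://"):
        return "mesos"
    if master.startswith("k8s://"):
        return "kubernetes"
    return "unknown"


# The host name below is a placeholder for illustration.
for url in ["local[*]", "spark://master-host:7077", "yarn"]:
    print(url, "->", deployment_mode(url))
```

A spark:// URL points at a standalone master, while yarn and mesos:// hand resource management to an external cluster manager that can also serve other frameworks.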

NEW QUESTION 36

The code block shown below should set the number of partitions that Spark uses when shuffling data for joins or aggregations to 100. Choose the answer that correctly fills the blanks in the code block to accomplish this.


__1__.__2__.__3__(__4__, 100)

- A. 1. pyspark; 2. config; 3. set; 4. "spark.sql.shuffle.partitions"

- B. 1. spark; 2. conf; 3. set; 4. "spark.sql.shuffle.partitions"

- C. 1. spark; 2. conf; 3. set; 4. "spark.sql.aggregate.partitions"

- D. 1. spark; 2. conf; 3. get; 4. "spark.sql.shuffle.partitions"

- E. 1. pyspark; 2. config; 3. set; 4. spark.shuffle.partitions

Answer: B

Explanation:


Correct code block:

spark.conf.set("spark.sql.shuffle.partitions", 100)

The conf interface is part of the SparkSession, so you need to call it through spark and not pyspark. To configure Spark, you need to use the set method, not the get method: get reads a property but does not write it. The correct property to achieve what is outlined in the question is spark.sql.shuffle.partitions, which needs to be passed to set as a string. The properties spark.shuffle.partitions and spark.sql.aggregate.partitions do not exist in Spark.

Static notebook | Dynamic notebook: See test 2

NEW QUESTION 37

......