
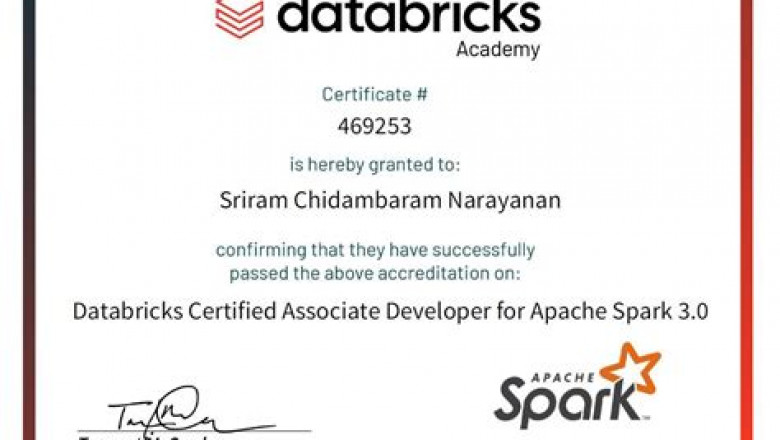

Databricks Associate-Developer-Apache-Spark Exam Flashcards

The debit card is available in only a few countries. Your information will be kept safe and confidential. If you purchase our Associate-Developer-Apache-Spark test torrent (Associate-Developer-Apache-Spark exam torrent), passing the exam is a piece of cake. If you have worked for many years and want a promotion by earning the Associate-Developer-Apache-Spark certification, our test questions and dumps can help you too. The Associate-Developer-Apache-Spark certification is a significant Databricks certificate that is now accepted in almost 70 countries around the world.


Download Associate-Developer-Apache-Spark Exam Dumps




2022 Databricks Associate-Developer-Apache-Spark - Trustable Exam Flashcards

Your dream life can really become a reality. We have authentic and updated Associate-Developer-Apache-Spark exam dumps that can help you pass the exam. How can you prepare for the exam in a short time with less effort?

We have more than ten years' experience in providing high-quality and valid Associate-Developer-Apache-Spark test questions. We stand behind the Associate-Developer-Apache-Spark test questions from our company, and we hope you enjoy the benefits that our study materials bring.

We hold the tenet that low-quality exam materials bring discredit on the company. ITCertKey is a good website that provides all candidates with the latest high-quality IT exam materials.

Download Databricks Certified Associate Developer for Apache Spark 3.0 Exam Dumps: https://www.braindumpstudy.com/databricks-certified-associate-developer-for-apache-spark-3.0-exam-dumps14220.html

NEW QUESTION 21

Which of the following code blocks returns all unique values of column storeId in DataFrame transactionsDf?

- A. transactionsDf.select("storeId").distinct() (Correct)

- B. transactionsDf.select(col("storeId").distinct())

- C. transactionsDf.filter("storeId").distinct()

- D. transactionsDf["storeId"].distinct()

- E. transactionsDf.distinct("storeId")

Answer: A

Explanation:


distinct() is a method of a DataFrame. Knowing this, or recognizing this from the documentation, is the key to solving this question.

More info: pyspark.sql.DataFrame.distinct - PySpark 3.1.2 documentation

Static notebook | Dynamic notebook: See test 2

NEW QUESTION 22

Which of the following describes the conversion of a computational query into an execution plan in Spark?

- A. Spark uses the catalog to resolve the optimized logical plan.

- B. Depending on whether DataFrame API or SQL API are used, the physical plan may differ.

- C. The executed physical plan depends on a cost optimization from a previous stage.

- D. The catalog assigns specific resources to the optimized memory plan.

- E. The catalog assigns specific resources to the physical plan.

Answer: C

Explanation:


The executed physical plan depends on a cost optimization from a previous stage.

Correct! Spark considers multiple physical plans on which it performs a cost analysis and selects the final physical plan in accordance with the lowest-cost outcome of that analysis. That final physical plan is then executed by Spark.

Spark uses the catalog to resolve the optimized logical plan.

No. Spark uses the catalog to resolve the unresolved logical plan, but not the optimized logical plan. Once the unresolved logical plan is resolved, it is then optimized using the Catalyst Optimizer.

The optimized logical plan is the input for physical planning.

The catalog assigns specific resources to the physical plan.

No. The catalog stores metadata, such as a list of names of columns, data types, functions, and databases.

Spark consults the catalog to resolve the references in the unresolved logical plan at the beginning of the conversion of the query into an execution plan. The result is a resolved logical plan, which the Catalyst Optimizer then turns into the optimized logical plan.

Depending on whether DataFrame API or SQL API are used, the physical plan may differ.

Wrong - the physical plan is independent of which API was used. And this is one of the great strengths of Spark!

The catalog assigns specific resources to the optimized memory plan.

There is no specific "memory plan" on the journey of a Spark computation.

More info: Spark's Logical and Physical plans ... When, Why, How and Beyond. | by Laurent Leturgez | datalex | Medium

NEW QUESTION 23

Which of the following code blocks displays various aggregated statistics of all columns in DataFrame transactionsDf, including the standard deviation and minimum of values in each column?

- A. transactionsDf.summary()

- B. transactionsDf.summary().show()

- C. transactionsDf.agg("count", "mean", "stddev", "25%", "50%", "75%", "min").show()

- D. transactionsDf.agg("count", "mean", "stddev", "25%", "50%", "75%", "min")

- E. transactionsDf.summary("count", "mean", "stddev", "25%", "50%", "75%", "max").show()

Answer: B

Explanation:


The DataFrame.summary() command is very practical for quickly calculating statistics of a DataFrame. You need to call .show() to display the results of the calculation. By default, the command calculates various statistics (see documentation linked below), including standard deviation and minimum.

Note that the answer that lists many options in the summary() parentheses does not include the minimum, which is asked for in the question.

Answer options that include agg() do not work here as shown, since DataFrame.agg() expects more complex, column-specific instructions on how to aggregate values.

More info:

- pyspark.sql.DataFrame.summary - PySpark 3.1.2 documentation

- pyspark.sql.DataFrame.agg - PySpark 3.1.2 documentation

Static notebook | Dynamic notebook: See test 3

NEW QUESTION 24

......