views

We search many things on the internet daily to purchase something, for comparing one product with another, how to decide that a specific product is superior to another? – We straight away check the reviews as well as see how many stars or positive feedbacks have been provided to the products.

Here, we will extract reviews from amazon.com with how many stars it has got, who had posted the reviews, etc.

We would be saving the data in CSV or an excel spreadsheet. The data fields we will scrape include:

- Review’s Title

- Ratings

- Reviewer’s Name

- Review’s Description or Content

- Useful Counts

Now, let’s start.

We choose Scrapy – a Python framework used for big-scale web data scraping. Together with it, a few other packages would be needed to extract Amazon products data.

- Requests – for sending request of the URL

- Pandas – for exporting CSV

- Pymy SQL – for connecting My SQL server as well as storing data there

- Math – for implementing mathematical operations

You may always install these packages like given below using conda or pip.

pip install scrapy or conda intall -c conda-forge scrapy

Let’s describe Start URL for scraping sellers’ links

Let’s initially observe what it’s like for scraping Amazon reviews for a single product.

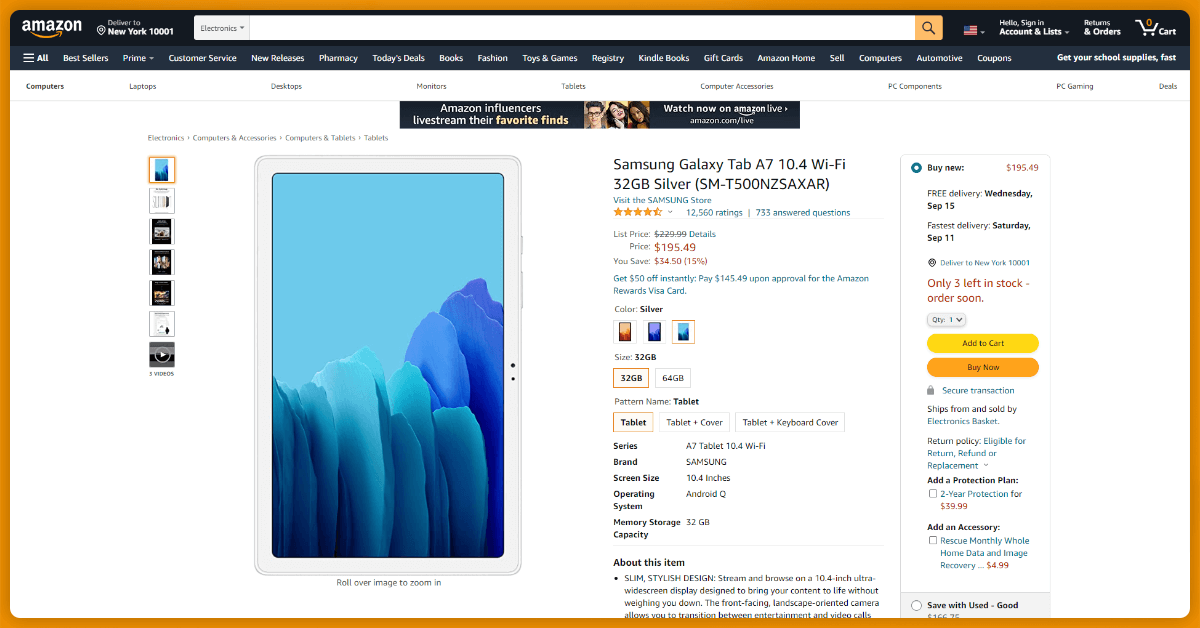

We have taken the URL: https://www.amazon.com/dp/B07N9255CG

This will be shown in the below image.

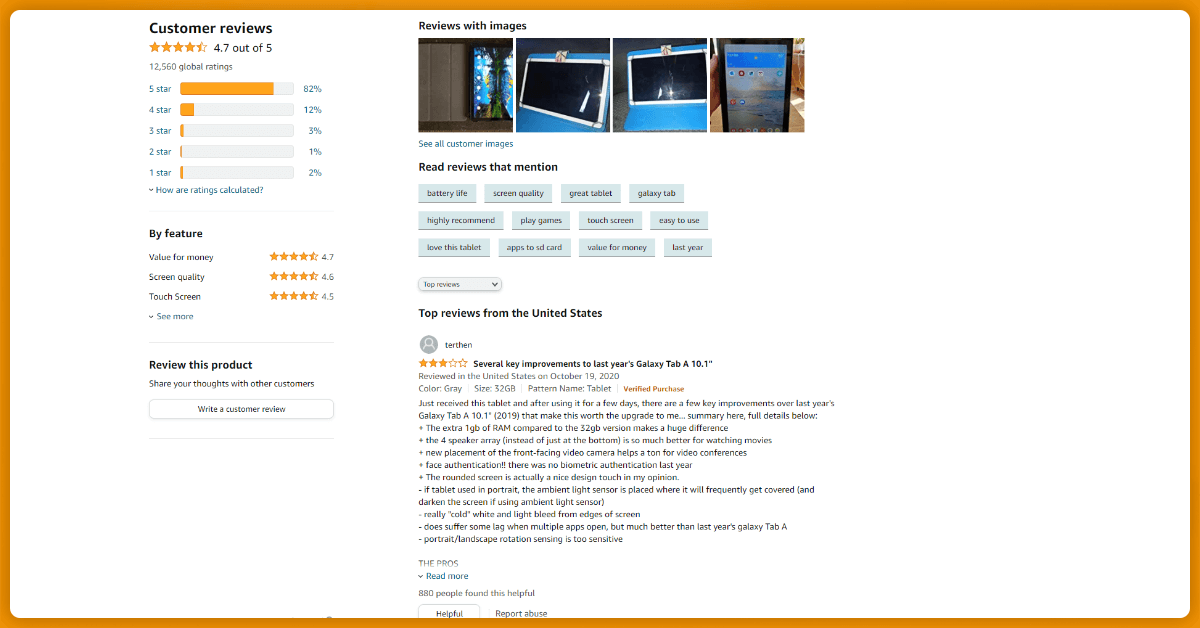

When we go to the reviews section, it’ll appear like an image given below. This might have a few different names within reviews.

However, in case, you carefully review those requests while loading a page as well as play a bit with the previous as well as next page of the reviews, you may have noticed that there’s the post request loading having all the content on a page.

Now, we’ll look at the required payloads and headers for any successful reply. In case, you have properly inspected the pages, then you’ll understand the differences between shifting a page as well as how that reflects on requests past that.

NEXT PAGE --- PAGE 2 https://www.amazon.com/hz/reviews-render/ajax/reviews/get/ref=cm_cr_arp_d_paging_btm_ next_2 Headers: accept: text/html,*/* accept-encoding: gzip, deflate, br accept-language: en-US,en;q=0.9 content-type: application/x-www-form-urlencoded;charset=UTF-8 origin: https://www.amazon.com referer: https://www.amazon.com/Moto-Alexa-Hands-Free-camera-included/productreviews/B07N9255CG?ie=UTF8&reviewerType=all_reviews user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36 x-requested-with: XMLHttpRequest Payload: reviewerType: all_reviews pageNumber: 2 shouldAppend: undefined reftag: cm_cr_arp_d_paging_btm_next_2 pageSize: 10 asin: B07N9255CG PREVIOUS PAGE --- PAGE 1 https://www.amazon.com/hz/reviewsrender/ajax/reviews/get/ref=cm_cr_getr_d_paging_btm_prev _1 Headers: accept: text/html,*/* accept-encoding: gzip, deflate, br accept-language: en-US,en;q=0.9 content-type: application/x-www-form-urlencoded;charset=UTF-8 origin: https://www.amazon.com referer: https://www.amazon.com/Moto-Alexa-Hands-Free-camera-included/ productreviews/B07N9255CG/ ref=cm_cr_arp_d_paging_btm_next_2? ie=UTF8&reviewerType=all_reviews& pageNumber=2 user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36 x-requested-with: XMLHttpRequest Payload: reviewerType: all_reviews pageNumber: 2 shouldAppend: undefined reftag: cm_cr_arp_d_paging_btm_next_2 pageSize: 10 asin: B07N9255CG

The Important Part: CODE or Script

There are a couple of different ways of making the script:

- Make a complete Scrapy project

- Just make the collection of files to narrow down a project size

During the previous tutorial, we had shown you the whole Scrapy project and information to create and also modify that. We have selected the most pointed way possible this time. We will use a collection of files and all the Amazon reviews will be available there!!

Because we use Scrapy & Python for extracting all the reviews, it’s easier to take the road of the xpath.

A most substantial part of the xpath is catching the pattern. As for copying related xpaths from the Google review window and also paste that, it’s extremely easy but pretty old school as well as also not efficient each time.

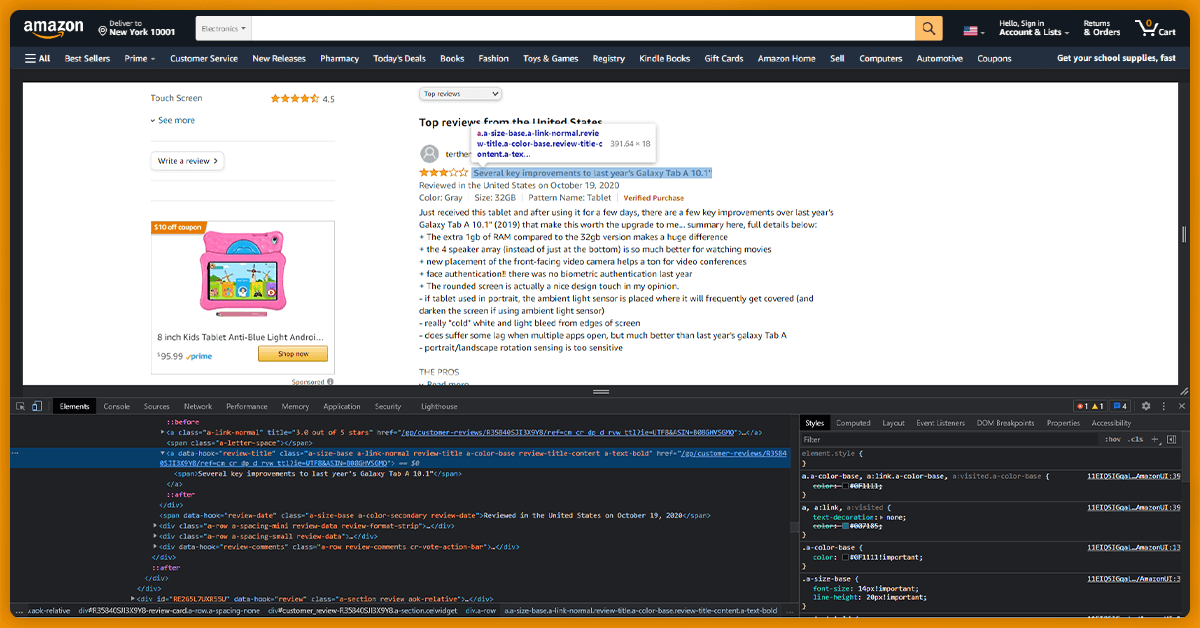

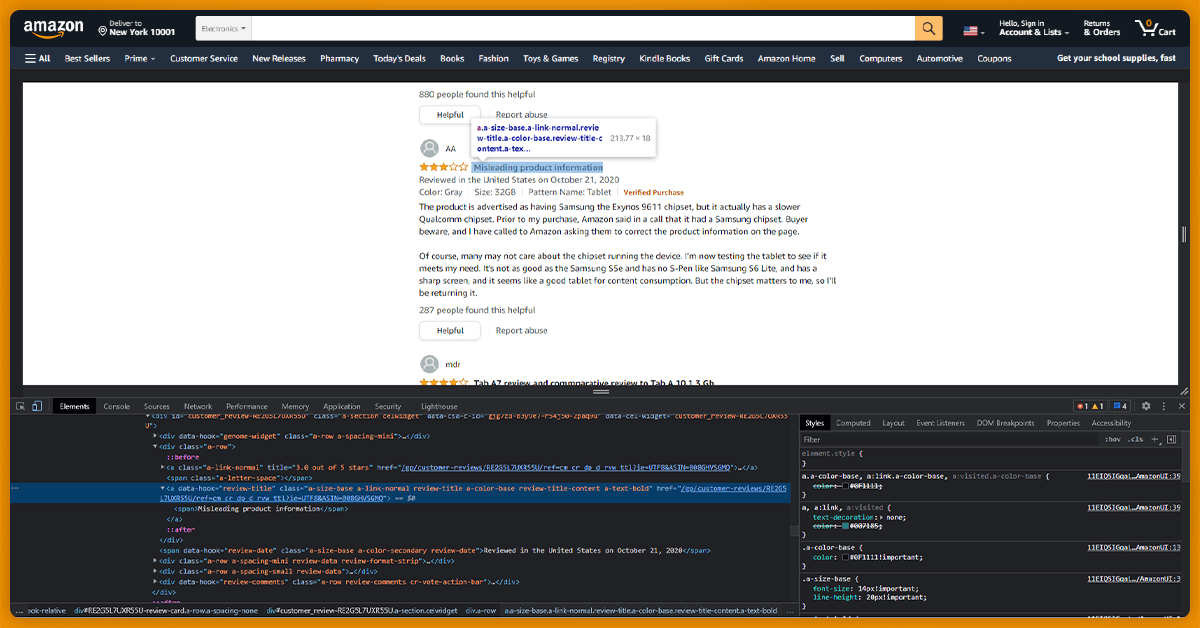

That’s what we will do here. We’ll observe the xpath for similar field, so let’s indicate “Review Title” as well as observe how that makes a pattern or something like that to narrow down the xpath.

There are a couple of examples of the similar xpath given here.

Here, you can see that there are parallel attributes to a tag that has data about the “Review Title”.

Therefore, resulting xpath the for Review Titles would be,

//a[contains(@class,"review-title-content")]/span/text()

Just like that we’ve provided all the xpaths for different fields that we will scrape.

- Review Title: //a[contains(@class,"review-title-content")]/span/text()

- Ratings: //a[contains(@title,"out of 5 stars")]/@title

- Reviewer’s Name: //div[@id="cm_cr-review_list"]//span[@class="a-profile-name"]/text()

- Review Description/Content: //span[contains(@class,"review-text-content")]/span/text()

- Helpful Counts: /span[contains(@class,"cr-vote-text")]/text()

Clearly, some joining and stripping to end results with some xpath is important to get ideal data. In addition, don’t overlook to remove additional white spaces.

As we have already seen how to go through the pages as well as also about how to scrape data from them, it is time to collect those!!

Here is the entire code given for scraping all the reviews for a single product!!!

import math, requests, json, pymysql

from scrapy.http import HtmlResponse

import pandas as pd

con = pymysql.connect ( 'localhost', 'root', 'password','database' )

raw_dataframe = [ ]

res = requests.get( 'https://www.amazon.com/Moto-Alexa-Hands-Free-camera-included/ product-reviews/B07N9255CG?ie=UTF8&reviewerType.all_reviews' )

response = HtmlResponse( url=res.url,body=res.content )

product_name = response.xpath( '//h1/a/text()').extract_first( default=' ' ).strip()

total_reviews = response.xpath('//span[contains(text(),"Showing")]/text()').extract_first(default='').strip().split()[-2]]

total_pages = math.ceil(int(total_reviews)/10)

for i in range(0,total_pages):

url = f"https//www.amazon.com/hz/reviews-render/ajax/reviews/get/ref=cm_crarp_d_paging_btm_next_{str(i+2)}"

head = {'accept': 'text/html, */*',

'accept-encoding': 'gzip,deflate,br',

'accept-language': 'en-US,en;q=0.9',

'content-type': 'application/x-www-form-urlencoded;charset=UTF-8', 'origin': 'https://www.amazon.com,

'referer':response.url,

'user-agent': 'Mozilla/5.0(Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KWH, like Gecko) Chrome/81.0.4044.113 Safari/537.36',

'x- requested-with': 'XMLHttpRequest'

}

payload = {'reviewerType':'all_reviews'

'pageNumber': i+2,

'shouldAppend': 'undefined',

'reftag': f'cm_crarp_d_paging_btm_next_{str(i+2))',

'pageSize': 10,

'asin': '807N9255C',

}

res = requests.post(url,headers=head,data=json.dumps(payload))

response = HtmlResponse(url=res.url, body=res.content)

loop = response.xpath('//div[contains(@class,"a-section review")]')

for part in loop:

review_title = part.xpath('.//a[contains(@Class,"review-title-content")]/span/text()').extract_first(default=' ').strip()

rating =part.xpath('.//a[contains(@title,"out of 5 stars")]/@title').extract_first(default=' ').strip().split()[0].strip()

reviewername = part.xpath('.//span[@class."a-profile-name']/text()').extract_first(default=' ').strip()

description =''.join(part. xpath('.//span[contains(@class,"review-text-content")]/span/text()') .extract()).strip()

helpful_count =part.xpath('.//span[contains(@class,"cr-vote-text")]/ text()').extract_first(default ='').strip().split()[0].strip()

raw_dataframe.append([product_name,review_title,rating,reviewer_name, description,helpful_count])

df =pd.Dataframe,(raw_dataframe,columns['Product Name','Review Title','Review Rating','Reviewer Name','Description','Helpful Count' ]),

#inserting into mySQL table

df.to_sql("review_table",if_exists='append',con=con)

#exporting csv

df.to_csv("amazon reviews.csv",index=None)

Points to Consider While Extracting Amazon Reviews

The entire procedure looks extremely easy to use but there could be some problems while scraping that like response issues, captcha issues, and more. To avoid the same, you need to keep a few vpns or proxies handy to make the process much smoother.

In addition, there are many times when a website changes its structure. In case, the scraping is going to be a long run, then, you need to always keep the error logs within your script, or error alerts would also help and you could be well aware of it the moment when structure gets changed.

Conclusion

All types of review scraping are much more helpful.

To observe product views by the customers in case, you are the seller on the same website.

Also for monitoring, new party sellers

For creating a dataset that is utilized for research for the academic or business objective?

Contact ReviewGators for more information or ask for a free quote!